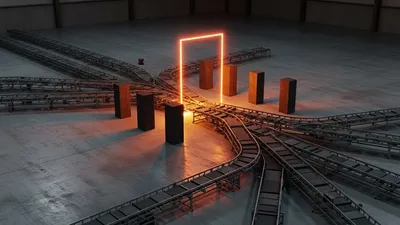

The Binary Revolution: Why Node.js Single Executable Applications Are Making Containers Obsolete

Containers are often overkill for tool distribution; it is time to master the native API that turns your Node.js projects into zero-dependency, standalone binaries.

You’ve been told for a decade that the only way to ship a reliable Node.js application is to wrap it in a Linux container. We’ve collectively accepted that to run a 50-line script on a server, we must first download a 400MB base image, layer on a gigabyte of node_modules, and manage a complex orchestration layer just to ensure the environment is "consistent." This is a lie—or at least, it’s a massive over-engineering of a simple problem. Docker is often a sledgehammer used to crack a nut, and for many use cases, Node.js Single Executable Applications (SEA) are about to make that sledgehammer look incredibly archaic.

The industry is shifting. We are moving away from the "ship the whole computer" philosophy of the 2010s and back toward the "ship the binary" elegance of the C and Go eras. With the introduction of the native SEA API in Node.js, we can finally bundle our code, our dependencies, and the Node.js runtime itself into a single, zero-dependency file. No npm install on the production server. No Docker daemon required. Just a binary that runs.

The Docker Tax and the Case for Binaries

I’ve spent far too many hours debugging why a CI/CD pipeline failed because a specific Debian mirror was down during a docker build. Containers solved the "it works on my machine" problem, but they introduced the "container overhead" problem.

When you ship a container, you’re shipping an entire operating system's worth of baggage. This includes package managers, shells, and libraries that your code will never touch, each representing a potential security vulnerability. If you're building a CLI tool, a microservice, or a small helper utility, the friction of managing image registries and container runtimes is a tax on your productivity.

Single Executable Applications (SEAs) flip the script. By producing a native binary, you get:

1. Instant Startup: No container initialization or filesystem layering.

2. Simplified Distribution: One file. Send it over scp, download it via curl, or put it on a USB stick.

3. Immutable Logic: The runtime and the code are fused. No one can "accidentally" change a file in node_modules on the server.

4. Reduced Attack Surface: The artifact ships no shell, package manager, or extraneous binaries for an attacker to hijack.

The Mechanics: How SEAs Actually Work

Before the native SEA API, we had tools like pkg or nexe. They worked by hacking together a virtual filesystem. They were clever, but they were fragile and often broke with new Node.js releases. Node’s native SEA support is different. It uses a "blob" injection method.

The process follows a specific lifecycle:

1. The Source: You write your standard Node.js code.

2. The Preparation: You generate a "preparation blob" using a configuration file.

3. The Injection: You take a copy of the actual node executable and "inject" that blob into a specific section of the binary (using a tool called postject).

Let's look at how this works in practice.

Step 1: A Basic Application

Let’s create a simple CLI tool that prints a few facts about the running process. This represents a typical "utility" that people unnecessarily containerize.

    // src/app.js
    const fs = require('node:fs');

    async function run() {
      console.log('--- The Binary Revolution ---');
      console.log(`Current Time: ${new Date().toISOString()}`);
      console.log(`Process ID: ${process.pid}`);

      // Demonstrate that we can still use core modules:
      // report the size of the executable we are running from
      const { size } = fs.statSync(process.execPath);
      console.log(`Executable size: ${(size / 1e6).toFixed(1)} MB`);

      const data = { message: 'Built as a standalone binary!' };
      console.log('Data Payload:', data);
    }

    run().catch((err) => {
      console.error(err);
      process.exit(1);
    });

Step 2: The Configuration

To tell Node.js how to package this, we need a JSON configuration file. This is the blueprint for our blob.

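    {
      "main": "src/app.js",
      "output": "sea-prep.blob",
      "disableExperimentalSEAWarning": true
    }

The main field names the entry script and output names the blob file to write. The disableExperimentalSEAWarning flag suppresses the experimental-feature warning that the finished binary would otherwise print at startup. It has no bearing on integrity; for that, see the code-signing section further down.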

Step 3: Generating the Blob

Now, we use the Node.js binary itself to create the preparation blob. By default the blob simply wraps your script's source; the optional useCodeCache and useSnapshot settings can add V8 code cache or a startup snapshot on top.

    node --experimental-sea-config sea-config.json

After running this, you’ll see a sea-prep.blob file in your directory. This is the "soul" of your application, but it still needs a "body" (the Node.js runtime) to live in.

Step 4: The Injection (The Fun Part)

This is where it gets interesting. We need to take the existing Node.js executable, copy it, and shove our blob inside it.

On macOS/Linux:

    # 1. Copy the node binary
    cp "$(command -v node)" my-custom-tool

    # 2. Remove the existing signature (macOS only; on Linux codesign is absent and this is a no-op)
    codesign --remove-signature my-custom-tool 2>/dev/null || true

    # 3. Inject the blob with postject (npx downloads it on first use)
    #    On macOS, also append: --macho-segment-name NODE_SEA
    npx postject my-custom-tool NODE_SEA_BLOB sea-prep.blob \
        --sentinel-fuse NODE_SEA_FUSE_fce680ab2cc467b1e072a8c546a11ff8

    # 4. macOS only: apply an ad-hoc signature so the modified binary is allowed to run
    codesign --sign - my-custom-tool 2>/dev/null || true

On Windows (PowerShell):

    # 1. Copy the node binary
    copy (Get-Command node).Source my-custom-tool.exe

    # 2. Inject the blob
    npx postject my-custom-tool.exe NODE_SEA_BLOB sea-prep.blob `
        --sentinel-fuse NODE_SEA_FUSE_fce680ab2cc467b1e072a8c546a11ff8

The string NODE_SEA_FUSE_fce680ab2cc467b1e072a8c546a11ff8 looks like magic, but it’s a constant compiled into every Node.js binary. postject searches the executable for this "sentinel" and flips the flag stored next to it, while the blob itself is stored as a resource named NODE_SEA_BLOB. At startup the runtime checks that flag, and that is how it realizes, "Hey, I’m not just a standard Node.js binary; I have an app inside me."
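To make the sentinel less mysterious, here is a minimal sketch that checks whether an executable has had its fuse flipped. It assumes the fuse is stored as the sentinel string followed by :0 (no blob) or :1 (blob injected), which is how postject's C header describes it. fuseState and fuseStateOfFile are hypothetical helper names, not part of any Node.js API.

```javascript
// Sketch: inspect the SEA fuse inside an executable.
// Assumption: the fuse is stored as "<sentinel>:0" or "<sentinel>:1".
const fs = require('node:fs');

const SENTINEL = 'NODE_SEA_FUSE_fce680ab2cc467b1e072a8c546a11ff8';

// Returns 'absent', 'unflipped', or 'flipped' for the given Buffer.
function fuseState(buffer) {
  const marker = Buffer.from(`${SENTINEL}:`, 'latin1');
  const index = buffer.indexOf(marker);
  if (index === -1) return 'absent';
  // The byte right after the colon is the flag; 0x31 is ASCII '1'
  const flag = buffer[index + marker.length];
  return flag === 0x31 ? 'flipped' : 'unflipped';
}

// Convenience wrapper that reads the whole executable from disk.
function fuseStateOfFile(filePath) {
  return fuseState(fs.readFileSync(filePath));
}

// Hypothetical usage:
//   console.log(fuseStateOfFile('./my-custom-tool'));
```

Under that assumption, a stock node binary should report unflipped and a binary that has been through postject should report flipped.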

Now, run ./my-custom-tool. It executes instantly. No node_modules folder, no package.json, just the binary.
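The four steps can also be written down as data, which makes the per-platform differences easy to see. The following is a rough sketch rather than a finished build script: seaBuildSteps is a hypothetical helper, the file names are the ones used in this article, and the macOS-only pieces (--macho-segment-name NODE_SEA plus the ad-hoc codesign calls) follow what the Node.js documentation lists for that platform.

```javascript
// Sketch: list the commands for a SEA build, one argv array per step.
const FUSE = 'NODE_SEA_FUSE_fce680ab2cc467b1e072a8c546a11ff8';

function seaBuildSteps({
  config = 'sea-config.json',
  blob = 'sea-prep.blob',
  binary = 'my-custom-tool',
  platform = process.platform,
} = {}) {
  const isMac = platform === 'darwin';
  const target = platform === 'win32' ? `${binary}.exe` : binary;

  const inject = ['npx', 'postject', target, 'NODE_SEA_BLOB', blob, '--sentinel-fuse', FUSE];
  if (isMac) inject.push('--macho-segment-name', 'NODE_SEA');

  const steps = [
    // Generate the preparation blob
    ['node', '--experimental-sea-config', config],
    // Copy the running node executable (portable alternative to cp/copy)
    ['node', '-e', `require('fs').copyFileSync(process.execPath, '${target}')`],
  ];
  if (isMac) steps.push(['codesign', '--remove-signature', target]);
  steps.push(inject);
  if (isMac) steps.push(['codesign', '--sign', '-', target]); // ad-hoc re-sign
  return steps;
}

// Print the plan for the current platform
for (const step of seaBuildSteps()) console.log(step.join(' '));
```

Each entry is a command followed by its arguments, so a real build script could hand them to child_process one at a time.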

Why This Beats "Just Installing Node"

You might argue, "Why not just install Node on the server and run the script?"

I’ve lived through the "version drift" nightmare. Server A has Node 16, Server B has Node 18, and a developer’s laptop has Node 20. Suddenly, a specific ESM feature or a fs/promises method behaves differently, or a dependency fails because of a C++ binding mismatch.

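When you use SEA, the runtime is locked. If you build your SEA with Node 20.10.0, it will *always* run on Node 20.10.0 logic, regardless of what is installed on the host OS. That pins the Node.js version the way a Docker image does, but without the virtualized network stack and the gigabytes of OS overhead. What it does not pin is the host's system libraries: the official Linux builds link dynamically against glibc, so the binary still needs a compatible libc on the target.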
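A quick way to convince yourself of this is to have the tool report its own runtime. The sketch below uses only standard process properties; runtimeInfo is a hypothetical helper name.

```javascript
// Sketch: report which runtime is executing this script.
// Inside an SEA these values come from the embedded Node.js,
// not from whatever node happens to be on the host's PATH.
function runtimeInfo() {
  return {
    node: process.version,
    v8: process.versions.v8,
    platform: `${process.platform}-${process.arch}`,
    execPath: process.execPath,
  };
}

console.log(runtimeInfo());
```

Wiring this behind a --version style flag gives you a cheap check that a deployed binary really carries the runtime you built it with.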

Handling Dependencies (The esbuild Trick)

The SEA API expects a single entry point. If your project has a massive node_modules tree, you can’t just point the config at index.js and hope for the best. Node won't automatically traverse your dependencies and include them in the blob.

To solve this, we use a bundler like esbuild to flatten our entire project into a single file before generating the blob. This is the secret sauce that makes SEAs viable for complex applications.

    # Install esbuild
    npm install --save-dev esbuild

    # Bundle everything into one file
    npx esbuild src/app.js --bundle --platform=node --outfile=dist/bundled.js

Then, update your sea-config.json to point to dist/bundled.js:

    {
      "main": "dist/bundled.js",
      "output": "sea-prep.blob",
      "disableExperimentalSEAWarning": true
    }

Now, your single binary contains your code *plus* every JavaScript library you’ve imported from npm.
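If you would rather drive the bundling from a build script than from the command line, esbuild exposes the same options through its JavaScript API. This is a minimal sketch assuming esbuild is installed as a dev dependency; bundleOptions and bundle are hypothetical helper names, and the explicit cjs format reflects the fact that SEA currently runs a single CommonJS script.

```javascript
// Sketch: the esbuild CLI invocation expressed as build() options.
function bundleOptions(entry = 'src/app.js', outfile = 'dist/bundled.js') {
  return {
    entryPoints: [entry],
    bundle: true,      // inline every imported module into one file
    platform: 'node',  // keep Node.js built-ins as plain requires
    format: 'cjs',     // the SEA entry point is loaded as CommonJS
    outfile,
  };
}

async function bundle(entry, outfile) {
  // Required lazily so this file still loads where esbuild is not installed
  const esbuild = require('esbuild');
  return esbuild.build(bundleOptions(entry, outfile));
}

module.exports = { bundleOptions, bundle };
```

Calling bundle() with no arguments produces the same dist/bundled.js that the sea-config.json points at.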

Real-World Gotchas: The Things They Don't Tell You

It’s not all sunshine and rainbows. If it were, Docker would have been deleted from GitHub yesterday. There are hurdles you need to be aware of.

1. The Cross-Compilation Wall

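Unlike Go, which can compile for Windows from a Mac with a simple environment variable, Node.js SEAs are tied to the platform and architecture of the node binary you use as your base. If you want to build a Windows .exe from your Linux CI runner, you can't just copy the Linux node binary. You have to download the Windows node.exe first, then inject your blob into it. Keep the blob plain when you do this: the Node.js documentation says useSnapshot and useCodeCache must stay off when the blob is generated on a different platform than the one it will run on.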
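Finding the right base binary is mostly a matter of URL construction. The helper below is a sketch, not an official API: nodeDownloadUrl is a hypothetical name, and the archive naming reflects how nodejs.org/dist is laid out at the time of writing. Windows gets a bare node.exe, while Linux and macOS releases come as archives with the executable at bin/node inside.

```javascript
// Sketch: build the nodejs.org download URL for a target's Node.js runtime.
// platform is 'win', 'linux', or 'darwin'; arch is e.g. 'x64' or 'arm64'.
function nodeDownloadUrl(version, platform, arch) {
  const base = `https://nodejs.org/dist/v${version}`;
  if (platform === 'win') {
    // Windows publishes the executable on its own
    return `${base}/win-${arch}/node.exe`;
  }
  // Linux and macOS publish archives; extract bin/node after downloading
  return `${base}/node-v${version}-${platform}-${arch}.tar.gz`;
}

console.log(nodeDownloadUrl('20.10.0', 'win', 'x64'));
console.log(nodeDownloadUrl('20.10.0', 'linux', 'x64'));
```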

I usually handle this in GitHub Actions by downloading the specific target binaries from the Node.js official mirrors:

    - name: Download Windows Node Binary
      run: curl -O https://nodejs.org/dist/v20.10.0/win-x64/node.exe

2. Assets and Filesystem Access

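Inside an SEA, __dirname and __filename behave differently. The code is loaded from the blob embedded in the executable, not from a file in your source tree, so the Node.js documentation defines __filename as process.execPath and __dirname as the directory that contains it. Code that relies on relative paths to find config files or images next to the original source will fail unless those files ship beside the binary.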

The best way to handle assets is to either:

1. Bundle them as strings/Base64 inside your JavaScript (using esbuild loaders).

2. Use process.execPath to find where the binary is located and look for external assets relative to that path.

    const path = require('node:path');

    // This gets the directory where the binary is actually sitting
    const binDir = path.dirname(process.execPath);
    const configPath = path.join(binDir, 'config.json');

3. Native Modules (.node files)

If your dependencies include native C++ modules (like sharp, sqlite3, or bcrypt), they won’t be bundled into the JS blob easily. These modules are compiled shared libraries. While you *can* bundle them, it requires a lot of manual lifting. For tools requiring native modules, I often find it's better to stick with a minimal Docker image or ensure the .node files are distributed alongside the binary.
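For the "distribute the .node files alongside the binary" route, one detail matters: the require() available to the main script of an SEA only resolves built-in modules, so the addon has to be loaded through a filesystem-aware require created with module.createRequire. The following is a minimal sketch under that assumption; besideBinary and loadAddon are hypothetical helpers, and binding.node is a placeholder file name.

```javascript
// Sketch: load a prebuilt native addon that ships next to the executable.
const path = require('node:path');
const { createRequire } = require('node:module');

// Path of a file located in the same directory as the executable.
function besideBinary(fileName, execPath = process.execPath) {
  return path.join(path.dirname(execPath), fileName);
}

// Create a require() that reads from disk and use it to load the addon.
function loadAddon(fileName) {
  const diskRequire = createRequire(process.execPath);
  return diskRequire(besideBinary(fileName));
}

// Hypothetical usage, assuming binding.node was copied beside the binary:
//   const binding = loadAddon('binding.node');
```

The addon must still be compiled for the same platform, architecture, and Node.js ABI as the runtime embedded in the binary.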

Security: Beyond the Container

We often use containers for security, thinking they are "sandboxes." But a container with a root user and a poorly configured network is no sandbox.

A Single Executable Application provides a different kind of security: Integrity. On Windows and macOS, you can sign your SEA binary. Once it is signed, any later modification invalidates the signature. macOS refuses to launch a binary whose signature no longer matches, and on Windows the broken signature is visible to the OS and can be blocked by policy. This closes off the "malicious injection" vector where someone modifies a script on your server.

On macOS, after injecting the blob, you should re-sign the binary:

    codesign --sign "Developer ID Application: Your Name" my-custom-tool

This makes your Node.js tool feel like a "real" application in the eyes of the operating system. For binaries that users download, pair the signature with Apple's notarization and the "Developer cannot be verified" warnings for your internal CLI tools go away.

The Edge and the Future of Deployment

Where does this leave us? Is Docker dead? No. If you're running a massive Kubernetes cluster with complex networking requirements, containers are still the gold standard.

However, for the "Edge"—Lambda functions, IoT devices, CI/CD runners, and internal CLI tools—the container is increasingly a burden. When you're paying for execution time in a serverless environment, the "cold start" of a container is money down the drain. An SEA starts in milliseconds.

I recently replaced a 600MB Docker image used for a database migration task with a 70MB Node.js binary. The deployment time dropped from 4 minutes (pulling the image, extracting layers) to 5 seconds (downloading the binary).

How to Get Started

If you want to try this today, ensure you’re on Node.js 20 or 22 (LTS versions). I consider the API stable enough for production use, but the feature is still officially labeled experimental in those release lines, hence the --experimental-sea-config flag, so pin your Node.js version and retest when you upgrade.

Here is a quick checklist for your first "Binary Revolution" project:

1. Pick a tool: Choose a script that currently requires a node_modules folder to run.

2. Bundle it: Use esbuild to create a dist/bundled.js.

3. Blob it: Create the sea-config.json and generate the blob.

4. Inject it: Use postject to fuse it with a Node binary.

5. Test it: Run it on a machine that doesn't even have Node installed.

The first time you run a Node project on a "clean" machine by just double-clicking a single file, it feels like magic. It feels like the way software was supposed to be.

We’ve spent a decade building walls around our code with containers. It’s time to realize that sometimes, we don't need walls; we just need a better way to package the code itself. The "Binary Revolution" isn't about throwing away everything we've learned—it's about reclaiming the simplicity that we lost along the way. Stop shipping the whole computer. Just ship the app.