The V8 Memory Trap: Why Your 1MB JSON Is Consuming 10MB of RAM

A deep dive into V8 pointers, hidden classes, and string primitives to explain why your JavaScript objects are significantly heavier than their raw data suggests.

Your file system is lying to you. Or, more accurately, your expectations about how data lives in memory are being sabotaged by the very engine designed to make your code run fast. You download a 1MB JSON blob from a REST API—maybe it’s a list of 10,000 users—and you think, "It's just a megabyte, my user's phone has 8GB of RAM, we're fine." Then you look at the Chrome Task Manager or a heap snapshot and realize that single JSON.parse() call just swallowed 10MB or 15MB of the heap.

This isn't a leak. It’s the "V8 Tax."

JavaScript is a high-level language, which is a polite way of saying it spends memory like a billionaire at a yacht auction to save you the trouble of manual memory management. To understand why your data bloats by 10x the moment it hits the heap, we have to look under the hood at how V8—the engine powering Chrome and Node.js—actually represents objects, strings, and pointers.

The Mirage of the "Small" Object

In C, a struct containing two 32-bit integers takes up exactly 8 bytes of memory. In JavaScript, an object like { x: 1, y: 2 } is a sprawling mansion of metadata.

When you create an object, V8 doesn't just store the values. It needs to know the "shape" of the object. This is called a Hidden Class (or "Map" in V8 terminology). Because JavaScript is dynamic, the engine needs a way to quickly look up where property x is located without doing a full hash-map lookup every single time.

The Anatomy of a JS Object

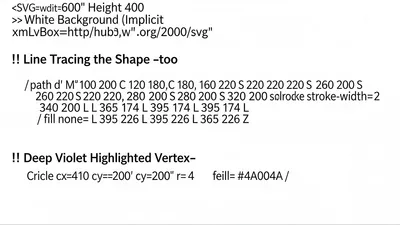

Every object in V8 has:

1. A Header: A pointer to the Hidden Class (Map) and information about the object's "properties" and "elements."

2. Properties: A pointer to an array of named properties.

3. Elements: A pointer to an array of indexed properties (like obj[0]).

4. In-object properties: A small amount of pre-allocated space to store values directly on the object for speed.

Even a completely empty object {} starts its life taking up roughly 28 to 56 bytes, depending on whether you're on a 32-bit or 64-bit system (and whether pointer compression is active).

Compare that to our 1MB JSON. If that JSON consists of 10,000 small objects, you aren't just paying for the data; you are paying the header tax 10,000 times.
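To put a rough number on that tax, here is a back-of-envelope sketch. It assumes the empty-object figures quoted above (28 bytes with 4-byte slots, 56 bytes with 8-byte slots), which works out to 7 slots per object. The constant and the function name are made up for illustration, and real objects with more properties will cost more.

```javascript
// Assumption: 7 slots per empty object, derived from the 28- and 56-byte
// figures above (28 / 4 = 7, 56 / 8 = 7). Not a value read from V8.
const SLOTS_PER_EMPTY_OBJECT = 7;

// Estimated bytes spent on object scaffolding alone, before any real data.
function headerTaxBytes(objectCount, slotSizeBytes) {
  return objectCount * SLOTS_PER_EMPTY_OBJECT * slotSizeBytes;
}

console.log(headerTaxBytes(10000, 4)); // 280000 (compressed pointers)
console.log(headerTaxBytes(10000, 8)); // 560000 (full 64-bit pointers)
```

Under those assumptions, a quarter to a half of the original megabyte is spent before a single id or name is stored.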

    // This looks tiny
    const data = { id: 1024, status: "active" };

    // In memory, it's roughly:
    // [Map Pointer (4-8 bytes)]
    // [Properties Pointer (4-8 bytes)]
    // [Elements Pointer (4-8 bytes)]
    // [In-Object Property 'id' (Smi: 4-8 bytes)]
    // [In-Object Property 'status' (Pointer to String object: 4-8 bytes)]

Hidden Classes: The Performance/Memory Trade-off

V8 tries to be smart. If you create 1,000 objects with the exact same keys in the exact same order, they all share the same Hidden Class. This is great for performance because the JIT (Just-In-Time) compiler can optimize machine code based on that shape.

However, if you start adding properties dynamically or changing the order of keys, V8 creates *new* Hidden Classes.

    function User(id, name) {
      this.id = id;
      this.name = name;
    }

    const u1 = new User(1, "Alice");
    const u2 = new User(2, "Bob");
    // u1 and u2 share the same Map. Efficient!

    const u3 = new User(3, "Charlie");
    u3.extra = true;
    // u3 now branches off into a new Map.
    // This transition tree itself consumes memory.

When you parse a giant JSON array, V8 creates these Maps on the fly. If your JSON structure is inconsistent (say, some objects have an email field and others don't), you end up with a ballooning number of Hidden Classes cached in the heap.
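One way to keep shapes consistent is to normalize records so every object gets the same keys in the same order. The sketch below is a minimal illustration: `normalizeUser` and the sample records are hypothetical, and since plain JavaScript cannot observe Hidden Classes directly, it uses key order as a stand-in for shape.

```javascript
// Hypothetical normalizer: always assigns the same keys in the same order,
// filling missing fields with null instead of leaving them out.
function normalizeUser(raw) {
  return {
    id: raw.id ?? null,
    name: raw.name ?? null,
    email: raw.email ?? null,
  };
}

// Two records with different key sets and different key order.
const rawUsers = [
  { id: 1, name: "Alice", email: "alice@example.com" },
  { name: "Bob", id: 2 },
];

const normalized = rawUsers.map(normalizeUser);

// Every normalized record now lists its keys identically.
console.log(normalized.map((u) => Object.keys(u).join(",")));
```

Normalizing after JSON.parse briefly doubles the number of objects, so where you can, it is cheaper to have the API emit a consistent structure in the first place.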

The Pointer Compression Reality

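Modern V8 (since version 8.0) supports a technique called Pointer Compression. In a 64-bit environment, pointers are 8 bytes. But the V8 team noticed that most heaps don't actually exceed 4GB. So, it stores a 32-bit offset from a base address instead of a full 64-bit address, cutting the size of pointers in half. Chrome ships with this enabled; stock Node.js builds generally do not, so on the server you should assume the larger 8-byte figures.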

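This was a huge win, but it's still "expensive." Consider a JSON array of 100,000 integers. In a Uint32Array, those 100,000 integers take exactly 400,000 bytes (0.4MB). In a standard JavaScript array, small integers are stored as "Smis" (Small Integers): tagged values that sit directly in the array slot, so they cost one slot each.

If a value does not fit in a Smi (31 bits when pointer compression is on), V8 has two options. An array that holds nothing but numbers switches to unboxed doubles at 8 bytes each. An array that also holds other kinds of values keeps generic slots, and each out-of-range number gets "boxed" into a HeapNumber object. In that worst case, instead of 4 bytes, each number takes: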

1. A pointer in the array (4 bytes)

2. A HeapNumber object on the heap (another 12-16 bytes)

Suddenly, your 0.4MB of data is taking up 2MB.
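The arithmetic behind those figures can be checked in a few lines. The typed-array size is exact; the boxed figures simply multiply out the per-number estimates from the list above and are not measured from a running engine.

```javascript
const COUNT = 100_000;

// Typed array: exactly 4 bytes per element, no per-item objects.
const typed = new Uint32Array(COUNT);
console.log(typed.byteLength); // 400000

// Boxed case from the list above: a 4-byte slot in the array plus a
// 12-16 byte HeapNumber per value. These are estimates, not measurements.
const boxedLowBytes = COUNT * (4 + 12);
const boxedHighBytes = COUNT * (4 + 16);
console.log(boxedLowBytes, boxedHighBytes); // 1600000 2000000
```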

Strings: The "One-Byte" vs "Two-Byte" Trap

JavaScript strings are notoriously memory-hungry. The ECMAScript spec defines a string as a sequence of 16-bit code units (in practice, UTF-16). Taken literally, that means every character takes at least 2 bytes.

"Hello" (5 chars) = 10 bytes? Not quite.

V8 uses an optimization: if a string only contains Latin-1 characters, it stores it as a "One-Byte" string. But the moment you introduce a single emoji or a non-Latin character, the *entire* string is promoted to 2-byte UTF-16.
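The rule is easy to mirror in a rough estimator. This is a sketch of the behavior described above, not a V8 API: `estimatePayloadBytes` is a made-up helper, and it ignores the string's own header, so real usage will be somewhat higher.

```javascript
// 1 byte per code unit if every code unit fits in Latin-1 (<= 0xFF),
// otherwise 2 bytes per code unit for the whole string.
function estimatePayloadBytes(str) {
  for (let i = 0; i < str.length; i++) {
    if (str.charCodeAt(i) > 0xff) {
      return str.length * 2; // one wide character promotes the whole string
    }
  }
  return str.length;
}

console.log(estimatePayloadBytes("Hello"));          // 5
console.log(estimatePayloadBytes("h\u00e9llo"));     // 5  (é is still Latin-1)
console.log(estimatePayloadBytes("Hello\u{1F600}")); // 14 (emoji = 2 code units)
```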

But wait, there's more. V8 doesn't just store characters. It uses Cons-Strings and Sliced-Strings.

If you concatenate two large strings:

    let big = longStringA + longStringB;

V8 doesn't necessarily create a new, flat string in memory. It creates a "Cons-String": a structure that just points to the two originals. This is fast, but for as long as the Cons-String stays in that form, both originals remain reachable and cannot be garbage collected.

Even worse is string.substring(). To avoid copying, V8 can represent a substring as a "Sliced-String" that keeps a reference to the massive original string. You might think you're only holding onto a short token, but you're actually pinning a 10MB log file in memory. (V8 copies very short substrings outright, so this mostly bites with longer slices, but it is worth confirming in a heap snapshot before assuming you are safe.)
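If you suspect a small substring is keeping a large parent alive, one defensive option in Node.js is to force a copy. The sketch below round-trips the text through a Buffer; `detachString` is a hypothetical helper, and whether V8 would have sliced or copied in the first place is an internal detail, so treat this as something to verify with a heap snapshot rather than a guaranteed fix.

```javascript
// Hypothetical helper: rebuilds the string from fresh bytes so the result
// is not a view into the parent string.
// Assumes Node.js and well-formed text (lone surrogates would be replaced).
function detachString(str) {
  return Buffer.from(str, 'utf8').toString('utf8');
}

// A large "log" with a short ID near the start.
const hugeLog = 'request-id=abc123def456ghi789 ' + 'x'.repeat(1_000_000);

// Copy the 18-character ID out instead of keeping a slice of hugeLog.
const requestId = detachString(hugeLog.substring(11, 29));
console.log(requestId); // abc123def456ghi789
```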

Practical Example: Measuring the Bloat

Let's look at a concrete script to see this in action. We'll generate a 1MB string of JSON and compare its size in "raw bytes" vs. its size in the V8 heap.

    const v8 = require('v8');

    // Note: a GC run between the two readings can skew the result,
    // so treat the number as an estimate.
    function getHeapChange(fn) {
      const before = v8.getHeapStatistics().used_heap_size;
      const result = fn();
      const after = v8.getHeapStatistics().used_heap_size;
      return {
        consumed: (after - before) / 1024 / 1024,
        result
      };
    }

    // 1. Create a roughly 1MB JSON string
    const userCount = 12000;
    const users = [];
    for (let i = 0; i < userCount; i++) {
      users.push({
        id: i,
        active: i % 2 === 0,
        name: "User " + i,
        uuid: "abc-def-ghi-" + i
      });
    }
    const jsonString = JSON.stringify(users);
    // Buffer.byteLength counts UTF-8 bytes, which is what travels over the wire.
    console.log(`JSON String Size: ${(Buffer.byteLength(jsonString) / 1024 / 1024).toFixed(2)} MB`);

    // 2. Parse it and see what happens to the heap
    const { consumed, result } = getHeapChange(() => {
      return JSON.parse(jsonString);
    });
    console.log(`Actual Heap Consumed: ${consumed.toFixed(2)} MB`);

If you run this, you'll likely see the "Actual Heap Consumed" is 4x to 8x the size of the string. The JSON string itself is just a sequence of characters. The resulting object is a massive graph of pointers, Hidden Classes, and String objects.
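Heap readings like this are noisy because the garbage collector can run at any point. A slightly steadier variant, sketched below, triggers a collection before each reading when Node is started with `--expose-gc`. The `measureRetained` name and the sample data are made up for illustration, and without the flag it falls back to the same rough estimate.

```javascript
const v8 = require('node:v8');

// globalThis.gc only exists when Node is started with --expose-gc.
// Without the flag this is a no-op and the numbers are noisier.
const collect = typeof globalThis.gc === 'function' ? globalThis.gc : () => {};

function measureRetained(fn) {
  collect();
  const before = v8.getHeapStatistics().used_heap_size;
  const result = fn(); // keep a reference so the parsed data stays alive
  collect();
  const after = v8.getHeapStatistics().used_heap_size;
  return { retainedBytes: after - before, result };
}

const sample = JSON.stringify(
  Array.from({ length: 1000 }, (_, i) => ({ id: i, name: 'User ' + i }))
);

const { retainedBytes, result } = measureRetained(() => JSON.parse(sample));
console.log(`raw: ${Buffer.byteLength(sample)} bytes, heap delta: ${retainedBytes} bytes`);
```

Run it as `node --expose-gc script.js` and repeat a few times; a single reading is still only an estimate.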

Why JSON.parse is a Memory Spike

When you run JSON.parse(), V8 doesn't just build the final object. The source string has to stay in memory while the object graph is built next to it, and the parser needs working memory of its own. If you are parsing a 50MB JSON file, you might see a memory spike of 150MB or 200MB during the operation before the GC kicks in and settles things down.

In a constrained environment (like a Lambda function or a cheap VPS), this spike is often what triggers an OOM (Out of Memory) crash, even if the final object would have "just barely" fit.
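A cheap guard is to compare the expected spike against the remaining heap before parsing. The sketch below is one possible check, not a guarantee: the 4x factor is an assumption taken from the rough 50MB to 150-200MB figure above, and `hasHeadroomFor` is a hypothetical helper.

```javascript
const v8 = require('node:v8');

// Assumption: peak usage is about 4x the payload size. Tune this against
// your own measurements; it is not a constant provided by V8.
const ASSUMED_PEAK_FACTOR = 4;

function hasHeadroomFor(jsonBytes, peakFactor = ASSUMED_PEAK_FACTOR) {
  const { heap_size_limit, used_heap_size } = v8.getHeapStatistics();
  const available = heap_size_limit - used_heap_size;
  return jsonBytes * peakFactor < available;
}

console.log(hasHeadroomFor(1024));    // a 1KB payload fits on any sane heap
console.log(hasHeadroomFor(2 ** 50)); // a petabyte payload never will
```

When the check fails, that is the signal to reject the request or switch to a streaming approach instead of letting the process die mid-parse.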

How to Defeat the Tax Man

If you are dealing with massive amounts of data and you're hitting memory limits, you have to stop using plain objects.

1. Use TypedArrays for Numeric Data

If you have a million coordinates, do not store them as [{x, y}, {x, y}]. That’s an object-overhead nightmare. Use a Float32Array.

    // Bad: one object per point
    const pointObjects = [{x: 1, y: 2}, {x: 3, y: 4}];

    // Good: one TypedArray for all points
    const pointBuffer = new Float32Array([1, 2, 3, 4]);
    // Access index i*2 for x, i*2 + 1 for y.

The Float32Array takes exactly 4 bytes per number. Period. No Hidden Classes, no pointers, no headers for every item.
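The index arithmetic gets tedious quickly, so it usually ends up behind a couple of small helpers. The following is a minimal sketch with made-up names (`coords`, `setPoint`, `getPoint`), assuming 32-bit float precision is good enough for your coordinates.

```javascript
const POINT_COUNT = 3;

// Interleaved layout: [x0, y0, x1, y1, x2, y2]
const coords = new Float32Array(POINT_COUNT * 2);

function setPoint(i, x, y) {
  coords[i * 2] = x;
  coords[i * 2 + 1] = y;
}

function getPoint(i) {
  // Builds a short-lived object only when a caller asks for one point.
  return { x: coords[i * 2], y: coords[i * 2 + 1] };
}

setPoint(0, 1, 2);
setPoint(1, 3, 4);
setPoint(2, 0.5, -8);

console.log(coords.byteLength); // 24
console.log(getPoint(2));       // { x: 0.5, y: -8 }
```

Values such as 0.1 are rounded to the nearest 32-bit float on the way in; if that matters, a Float64Array doubles the cost to 8 bytes per number but is still far denser than one object per point.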

2. Flatten Your Data

Avoid deep nesting. Every level of nesting is another object header and another set of pointers. A flat array of strings is significantly cheaper than an array of objects containing one string each.
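One common way to flatten a list of records is a column-oriented layout: one array per field instead of one object per row. The sketch below is an illustration under stated assumptions: `toColumns` is a hypothetical helper, and it assumes ids fit in a signed 32-bit integer.

```javascript
// Converts an array of row objects into one array per field.
function toColumns(users) {
  const ids = new Int32Array(users.length);
  const active = new Uint8Array(users.length); // 1 = true, 0 = false
  const names = new Array(users.length);

  users.forEach((user, i) => {
    ids[i] = user.id;
    active[i] = user.active ? 1 : 0;
    names[i] = user.name;
  });

  return { ids, active, names };
}

const columns = toColumns([
  { id: 7, active: true, name: 'Alice' },
  { id: 8, active: false, name: 'Bob' },
]);

// A row is reassembled by reading the same index from each column.
console.log(columns.ids[1], columns.active[1] === 1, columns.names[1]); // 8 false Bob
```

The row objects can be dropped once the columns are built, leaving three containers instead of thousands of small objects.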

3. Use Maps for Dynamic Keys

If you are using an object as a dictionary where you frequently add/remove keys, use the native Map.

    const dict = new Map();
    dict.set('key', 'value');

V8 handles Map differently than regular objects. While a Map has its own overhead, it doesn't suffer from the "Hidden Class explosion" that happens when you use an object as a dynamic hash map.
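A slightly fuller sketch of the add/remove pattern, using a hypothetical session cache whose keys come and go at runtime:

```javascript
// Hypothetical session cache keyed by IDs that appear and disappear.
const sessions = new Map();

sessions.set('sess-a', { userId: 1 });
sessions.set('sess-b', { userId: 2 });
sessions.delete('sess-a'); // removal is a first-class operation

console.log(sessions.size);          // 1
console.log(sessions.has('sess-a')); // false
console.log(sessions.get('sess-b')); // { userId: 2 }
```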

4. Process Streams, Don't Buffer

If you're in Node.js, don't JSON.parse(fs.readFileSync('big.json')). Use a streaming parser like stream-json. This allows you to process one "user" at a time, letting the Garbage Collector reclaim memory as you go, rather than loading the entire 10x-inflated blob into RAM at once.
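stream-json has to be installed and its API is best taken from its own documentation, so the sketch below swaps in a different technique that needs only Node's standard library: newline-delimited JSON read with `node:readline`. It assumes you control the producer and can emit one object per line; the file name and the `countActiveUsers` helper are made up for illustration.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');
const readline = require('node:readline');

// Reads one JSON object per line, so only a single record is parsed and
// held at a time; earlier records become garbage as the loop advances.
async function countActiveUsers(filePath) {
  const lines = readline.createInterface({
    input: fs.createReadStream(filePath, { encoding: 'utf8' }),
    crlfDelay: Infinity,
  });

  let active = 0;
  for await (const line of lines) {
    if (line.trim() === '') continue; // tolerate blank lines
    const user = JSON.parse(line);
    if (user.active) active += 1;
  }
  return active;
}

// Demo: write a tiny NDJSON file to the temp directory, then stream it.
const demoPath = path.join(os.tmpdir(), `users-demo-${process.pid}.ndjson`);
const demoUsers = [
  { id: 1, active: true },
  { id: 2, active: false },
  { id: 3, active: true },
];
fs.writeFileSync(demoPath, demoUsers.map((u) => JSON.stringify(u)).join('\n') + '\n');

countActiveUsers(demoPath)
  .then((count) => console.log(count)) // 2
  .finally(() => fs.unlinkSync(demoPath));
```

If the input is a single large JSON array that you cannot reformat, a true streaming parser such as stream-json is the tool to reach for instead.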

The Bottom Line

V8's memory management is optimized for speed of access, not density of storage. It assumes that memory is cheap and developer time is expensive. For 90% of web apps, this is the right trade-off.

But when you're the one building the other 10%—the data-heavy dashboards, the real-time visualizations, or the heavy-duty backends—you can't afford to be naive. That 1MB JSON file is a Trojan horse; once it's inside the heap, it's ten times the size you thought it was. Keep your structures flat, use TypedArrays where possible, and always profile your heap, not just your file sizes.